We are pleased to announce that a groundbreaking paper titled “Robust Graph Contrastive Learning with Information Restoration” has been accepted for publication in the August 2025 issue of the journal IEEE Transactions on Information Forensics and Security. The paper is a collaboration among our faculty member Dr. Yulin Zhu (first author) and researchers from The Hong Kong Polytechnic University and Washington University in St. Louis, USA.

- Yulin Zhu, Xing Ai, Yevgeniy Vorobeychik, Kai Zhou. Robust Graph Contrastive Learning with Information Restoration. (2025). IEEE Transactions on Information Forensics and Security [Accepted]

Paper Abstract

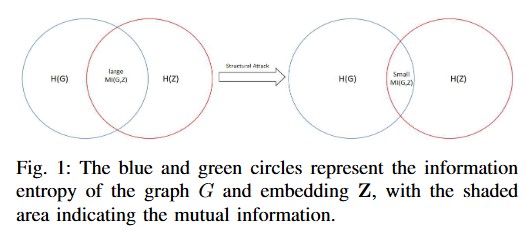

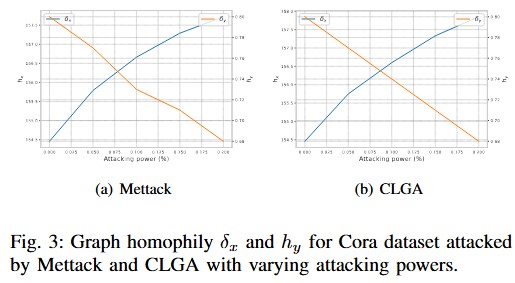

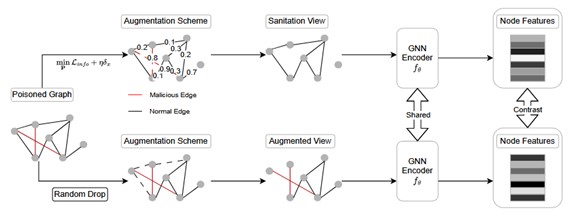

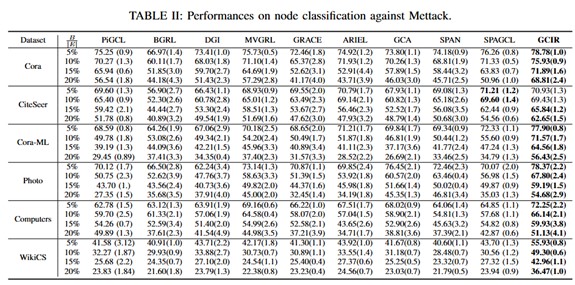

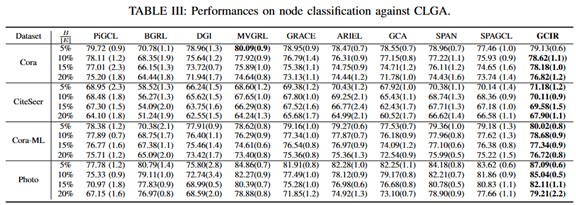

The graph contrastive learning (GCL) framework has gained remarkable achievements in graph representation learning. However, similar to graph neural networks (GNNs), GCL models are susceptible to graph structural attacks. As an unsupervised method, GCL faces greater challenges in defending against adversarial attacks. Furthermore, there has been limited research on enhancing the robustness of GCL. To thoroughly explore the failure of GCL on the poisoned graphs, we investigate the detrimental effects of graph structural attacks against the GCL framework. We discover that, in addition to the conventional observation that graph structural attacks tend to connect dissimilar node pairs, these attacks also diminish the mutual information between the graph and its representations from an information-theoretical perspective, which is the cornerstone of the high-quality node embeddings for GCL. Motivated by this theoretical insight, we propose a robust graph contrastive learning framework with a learnable sanitation view that endeavors to sanitize the augmented graphs by restoring the diminished mutual information caused by the structural attacks. Additionally, we design a fully unsupervised tuning strategy to tune the hyperparameters without accessing the label information, which strictly coincides with the defender’s knowledge. Extensive experiments demonstrate the effectiveness and efficiency of our proposed method compared to competitive baselines.

The research team members

Hong Kong Chuhai College

- Dr. Yulin Zhu — Assistant Professor, Department of Computer Science

Washington University in St. Louis

- Yevgeniy Vorobeychik — Full Professor, Department of Computer Science & Engineering

The Hong Kong Polytechnic University

- Xing Ai — PhD student

- Kai Zhou — Assistant Professor, Department of Computing Science

Photos from the paper