We’re pleased to announce a groundbreaking paper titled “Long Short-Term Fusion by Multi-Scale Distillation for Screen Content Video Quality Enhancement,” published this month, August 2025, in IEEE Transactions on Circuits and Systems for Video Technology (TCSVT). The paper is a collaboration with The Hong Kong Polytechnic University and Tsientang Institute for Advanced Study:

- Z. Huang, Y. -L. Chan, N. -W. Kwong, S. -H. Tsang, K. -M. Lam and W. -K. Ling, “Long Short-Term Fusion by Multi-Scale Distillation for Screen Content Video Quality Enhancement,” in IEEE Transactions on Circuits and Systems for Video Technology, vol. 35, no. 8, pp. 7762-7777, Aug. 2025, doi: 10.1109/TCSVT.2025.3544314.

Paper Link: https://ieeexplore.ieee.org/document/10898056

Paper Abstract

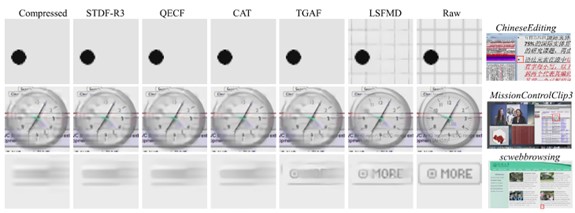

This research addresses a common issue in compressed screen content videos, such as those from webinars or online presentations, where video quality often suffers due to noticeable distortions, especially around the edges and during rapid scene changes.

Traditional methods struggle to improve these videos effectively, particularly during abrupt scene switches. Our proposed solution introduces a new approach that better handles these challenges.

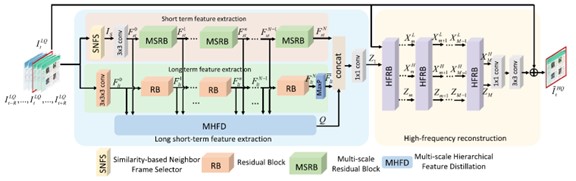

We developed two separate streams for feature extraction: one that captures long-term context and another that focuses on short-term details. This combination allows the system to efficiently track fast movements and quickly changing scenes. To further enhance video quality during these transitions, we included a smart neighbor frame selector that identifies the most relevant frames to use for improvement.
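To make the idea of neighbor frame selection more concrete, here is a minimal sketch in Python. It is not the selector from the paper: it simply assumes that a neighbor is useful when its mean absolute pixel difference from the target frame is small, so frames on the other side of a scene switch are skipped. The names `select_neighbors`, `window`, and `max_diff` are hypothetical and chosen only for this illustration.

```python
from typing import List, Sequence

Frame = Sequence[float]  # a frame flattened to a list of pixel intensities


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute pixel difference between two equally sized frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_neighbors(frames: List[Frame], target: int, window: int = 2,
                     max_diff: float = 30.0) -> List[int]:
    """Return indices of nearby frames that resemble frames[target].

    window and max_diff are hypothetical parameters for this sketch,
    not values taken from the paper.
    """
    lo = max(0, target - window)
    hi = min(len(frames) - 1, target + window)
    scored = []
    for i in range(lo, hi + 1):
        if i == target:
            continue
        d = mean_abs_diff(frames[i], frames[target])
        if d <= max_diff:  # drop frames that look like a different scene
            scored.append((d, i))
    scored.sort()  # most similar neighbors first
    return [i for _, i in scored]


# Toy clip: frames 0-2 show one slide, frames 3-4 show a different slide.
clip = [[10.0] * 4, [12.0] * 4, [11.0] * 4, [200.0] * 4, [201.0] * 4]
neighbors = select_neighbors(clip, target=2)
print(neighbors)
```

In this toy clip, the frame just before the slide change keeps only the two earlier frames as neighbors, while the frames after the change are rejected.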

Additionally, our method employs a technique called multi-scale feature distillation, which helps refine and improve the important features we extract. We also created a special block designed to restore high-frequency details, ensuring that fine textures and edges are clear.
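As a rough illustration of what "high-frequency details" means here, the sketch below separates a row of pixels into a smoothed part and a residual. This is a generic signal-processing idea, not the reconstruction block from the paper; the helper names `box_blur` and `high_frequency` are hypothetical.

```python
from typing import List


def box_blur(row: List[float], radius: int = 1) -> List[float]:
    """Smooth a row of pixels with a moving average, shrinking the window at the borders."""
    out = []
    for i in range(len(row)):
        lo = max(0, i - radius)
        hi = min(len(row), i + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


def high_frequency(row: List[float], radius: int = 1) -> List[float]:
    """Residual between the row and its blurred copy (the fine detail)."""
    return [x - b for x, b in zip(row, box_blur(row, radius))]


flat = [5.0, 5.0, 5.0, 5.0]   # uniform background: no detail
edge = [0.0, 0.0, 9.0, 9.0]   # sharp edge, as around text on a slide
print(high_frequency(flat))
print(high_frequency(edge))
```

The residual is zero on the flat row and non-zero only next to the edge, which is the kind of detail that compression tends to blur in screen content.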

Our experimental results show that the Long Short-Term Fusion by Multi-Scale Distillation (LSFMD) method significantly improves the quality of compressed screen content videos, outperforming existing state-of-the-art techniques. This advancement promises to enhance the viewing experience for online content, making it clearer and more enjoyable for users.

We will keep advancing our collaboration with leading research institutions both locally and on the mainland to develop cutting-edge technologies!

The research team members

Hong Kong Chu Hai College

- Dr Harris Sik-Ho Tsang, Assistant Professor in the Department of Computer Science

The Hong Kong Polytechnic University

- Dr Ziyin Huang, PhD in the Department of Electrical and Electronic Engineering

- Dr Yui-Lam Chan, Associate Professor in the Department of Electrical and Electronic Engineering

- Dr Ngai-Wing Kwong, Postdoctoral Fellow in the Department of Electrical and Electronic Engineering

- Prof. Kin-Man Lam, Professor in the Department of Electrical and Electronic Engineering

Tsientang Institute for Advanced Study

- Prof. Wing-Kuen Ling, Professor in the Center for Integrated Circuits and Artificial Intelligence

Some photos of the authors and papers

Our proposed LSFMD structure, which contains long short-term feature extraction, multi-scale hierarchical feature distillation, and high-frequency reconstruction.

Our LSFMD achieves better visual quality than other state-of-the-art approaches. (Raw: the original uncompressed image)