We are pleased to announce that our recent paper, “Revisiting Adversarial Robustness of GNNs Against Structural Attacks: A Simple and Fast Approach,” was published in IEEE Transactions on Information Forensics and Security (TIFS) in December 2025. The research is a collaboration among our Department, The Hong Kong Polytechnic University, Shanghai Jiao Tong University, and the University of California, Irvine.

- Xing Ai, Yulin Zhu, Yu Zheng, Gaolei Li, Jianhua Li, Kai Zhou, “Revisiting Adversarial Robustness of GNNs Against Structural Attacks: A Simple and Fast Approach,” in IEEE Transactions on Information Forensics and Security, vol. 21, pp. 446-459, 2025, doi: 10.1109/TIFS.2025.3641816.

Link to Paper: https://ieeexplore.ieee.org/document/11311611

Abstract

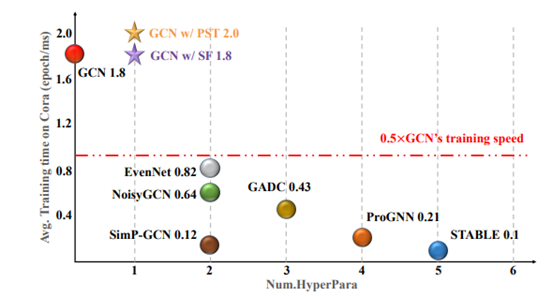

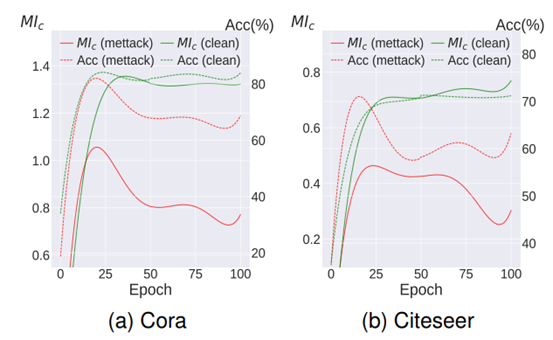

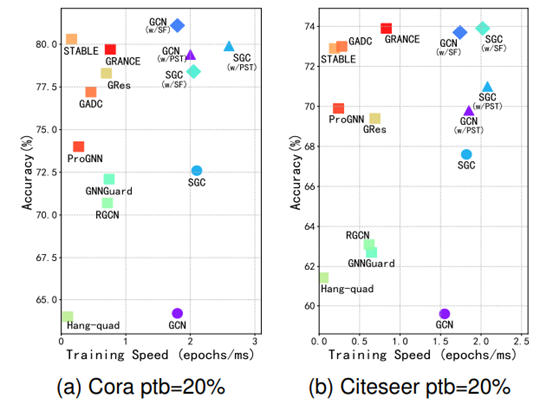

To defend against adversarial structural attacks on graphs, we analyze attacks through the lens of mutual information and discover the “pairwise effect”. This effect reveals that structural attacks effectively degrade the performance of victim GNNs when these GNNs receive the modified structure paired with the given node attributes as training input. Therefore, we propose a novel defense strategy that renders structural attacks ineffective by disrupting the pairing of modified structures and node attributes during the training of victim GNNs, which we call “disrupting the pairwise effect”. To implement this idea, we propose two simple yet effective training strategies: Structural Fine-Tuning (SF) and Progressive Structural Training (PST), which disrupt the pairwise effect through node attribute pre-training followed by structure fine-tuning and through progressive structure training, respectively. Compared to existing robust GNNs, our strategies avoid time-consuming techniques, thereby improving the robustness of GNNs while enhancing training speed. Additionally, these strategies can be easily applied to a wide range of commonly used GNNs, including robust GNN variants, making them highly adaptable to different models and applications. We provide theoretical analysis of the proposed training strategies and conduct extensive experiments on various datasets to demonstrate their effectiveness. The datasets and code for this paper are available at https://github.com/Xing-Ai1003/Revisiting-Adversarial-Robustness-of-GNNs.
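For readers who want a concrete picture of the Structural Fine-Tuning idea named in the abstract, the two-phase schedule (attribute pre-training, then structure fine-tuning) might be sketched roughly as follows. This is an illustration only and not the authors' implementation, which is available in the repository linked above. The single-layer linear model, the use of an identity matrix to stand in for "attributes only", the toy graph, the function names, and all hyperparameters are assumptions made for this sketch.

```python
import numpy as np

def normalize_adj(adj):
    """Symmetric normalization with self-loops: D^{-1/2} (A + I) D^{-1/2}."""
    a = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train(w, prop, x, y_onehot, train_mask, epochs, lr):
    """Gradient descent on cross-entropy for the linear model softmax(prop @ x @ w)."""
    h = prop @ x  # propagated features; prop = I means "attributes only"
    for _ in range(epochs):
        p = softmax(h @ w)
        # Gradient of the mean cross-entropy over training nodes w.r.t. the logits.
        grad_logits = (p - y_onehot) * train_mask[:, None] / train_mask.sum()
        w = w - lr * (h.T @ grad_logits)
    return w

def structural_fine_tuning(x, adj, y_onehot, train_mask,
                           pre_epochs=200, ft_epochs=20, pre_lr=0.5, ft_lr=0.05):
    """Hypothetical two-phase schedule; epochs and learning rates are assumptions."""
    n = x.shape[0]
    w = np.zeros((x.shape[1], y_onehot.shape[1]))
    # Phase 1 (assumed form of attribute pre-training): the model never sees the graph.
    w = train(w, np.eye(n), x, y_onehot, train_mask, pre_epochs, pre_lr)
    # Phase 2 (assumed form of structure fine-tuning): short, low-learning-rate
    # training on the possibly perturbed structure.
    w = train(w, normalize_adj(adj), x, y_onehot, train_mask, ft_epochs, ft_lr)
    return w

# Toy data: two triangles joined by one cross-class edge (2, 3).
x = np.array([[1, 0], [1, 0], [1, 0], [0, 1], [0, 1], [0, 1]], dtype=float)
labels = np.array([0, 0, 0, 1, 1, 1])
y_onehot = np.eye(2)[labels]
adj = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    adj[i, j] = adj[j, i] = 1.0
train_mask = np.array([1, 1, 0, 0, 1, 1], dtype=float)  # nodes 2 and 3 are held out

w = structural_fine_tuning(x, adj, y_onehot, train_mask)
pred = (normalize_adj(adj) @ x @ w).argmax(axis=1)
print(pred)
```

The point of the split is that the weights are first fitted without any access to the graph, so the modified structure and the node attributes are not presented together during most of training. How the paper realizes each phase, and how Progressive Structural Training differs, is not covered by this sketch.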

Research Team Members

Hong Kong Chu Hai College

- Dr. Zhu Yulin, Assistant Professor, Department of Computer Science

The Hong Kong Polytechnic University

- Dr. Ai Xing, PhD Student, Department of Computing

- Prof. Zhou Kai, Assistant Professor, Department of Computing

Shanghai Jiao Tong University

- Prof. Li Gaolei, Associate Professor, School of Electronic Information and Electrical Engineering

- Prof. Li Jianhua, Professor, School of Electronic Information and Electrical Engineering

University of California, Irvine

- Dr. Zheng Yu, Postdoctoral Researcher, Donald Bren School of Information and Computer Sciences

Photos of the authors and the paper